The embedded inline Voice AI widget places a conversational assistant directly in your page flow. It supports microphone capture, real-time speech-to-text, natural spoken responses via text-to-speech, transcripts, and smooth handoffs to human agents. Its controls respect browser microphone permissions and mobile playback restrictions, while admins manage branding, prompts, and routing for a consistent customer experience (CX).

- Inline voice chat component that embeds within page content
- Push-to-talk microphone with clear recording states and timers
- Real-time speech-to-text with live captions
- Natural voice responses via text-to-speech, with selectable voices
- Full transcript view with copy and download options
- Context handoff to human agents in Conversations
- Custom branding, prompt settings, and welcome messages
- Multi-language detection and locale-aware responses where supported
- Respectful browser permission handling and autoplay-safe playback
- Analytics for starts, completions, drop-offs, and escalations
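To show how the four analytics counts relate to each other, here is a minimal TypeScript sketch that summarizes session events into a simple funnel. The `SessionEvent` shape, the `summarizeSessions` helper, and the definition of a drop-off (a session that started but was neither completed nor escalated) are assumptions for illustration, not the widget's documented analytics schema.

```typescript
// Hypothetical event shape; the widget's real analytics schema may differ.
type SessionEvent = {
  sessionId: string;
  kind: "start" | "complete" | "escalate";
};

interface FunnelSummary {
  starts: number;
  completions: number;
  escalations: number;
  dropOffs: number;       // assumption: started, but neither completed nor escalated
  completionRate: number; // completions / starts, or 0 when there are no starts
}

function summarizeSessions(events: SessionEvent[]): FunnelSummary {
  // Sets de-duplicate repeated events for the same session.
  const started = new Set<string>();
  const completed = new Set<string>();
  const escalated = new Set<string>();

  for (const e of events) {
    if (e.kind === "start") started.add(e.sessionId);
    else if (e.kind === "complete") completed.add(e.sessionId);
    else escalated.add(e.sessionId);
  }

  // A drop-off is a started session with no completion and no escalation.
  let dropOffs = 0;
  started.forEach((id) => {
    if (!completed.has(id) && !escalated.has(id)) dropOffs += 1;
  });

  return {
    starts: started.size,
    completions: completed.size,
    escalations: escalated.size,
    dropOffs,
    completionRate: started.size === 0 ? 0 : completed.size / started.size,
  };
}

// Example: three sessions, one completed, one escalated, one abandoned.
const summary = summarizeSessions([
  { sessionId: "s1", kind: "start" },
  { sessionId: "s1", kind: "complete" },
  { sessionId: "s2", kind: "start" },
  { sessionId: "s2", kind: "escalate" },
  { sessionId: "s3", kind: "start" },
]);
console.log(summary);
```

Counting distinct session IDs rather than raw events keeps a retried start or a double-submitted completion from inflating the rates.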

- Place the inline widget near key tasks such as pricing, booking, or checkout to reduce friction
- Keep the welcome prompt concise and hint at what the assistant can do
- Enable text-to-speech only where spoken replies add clarity, and default to text on quiet pages
- Offer a visible option to type instead of talk, for accessibility and noisy environments
- Review transcripts weekly to refine intents and improve answer quality
- Route high-intent phrases to a live agent queue, with tags for priority follow-up

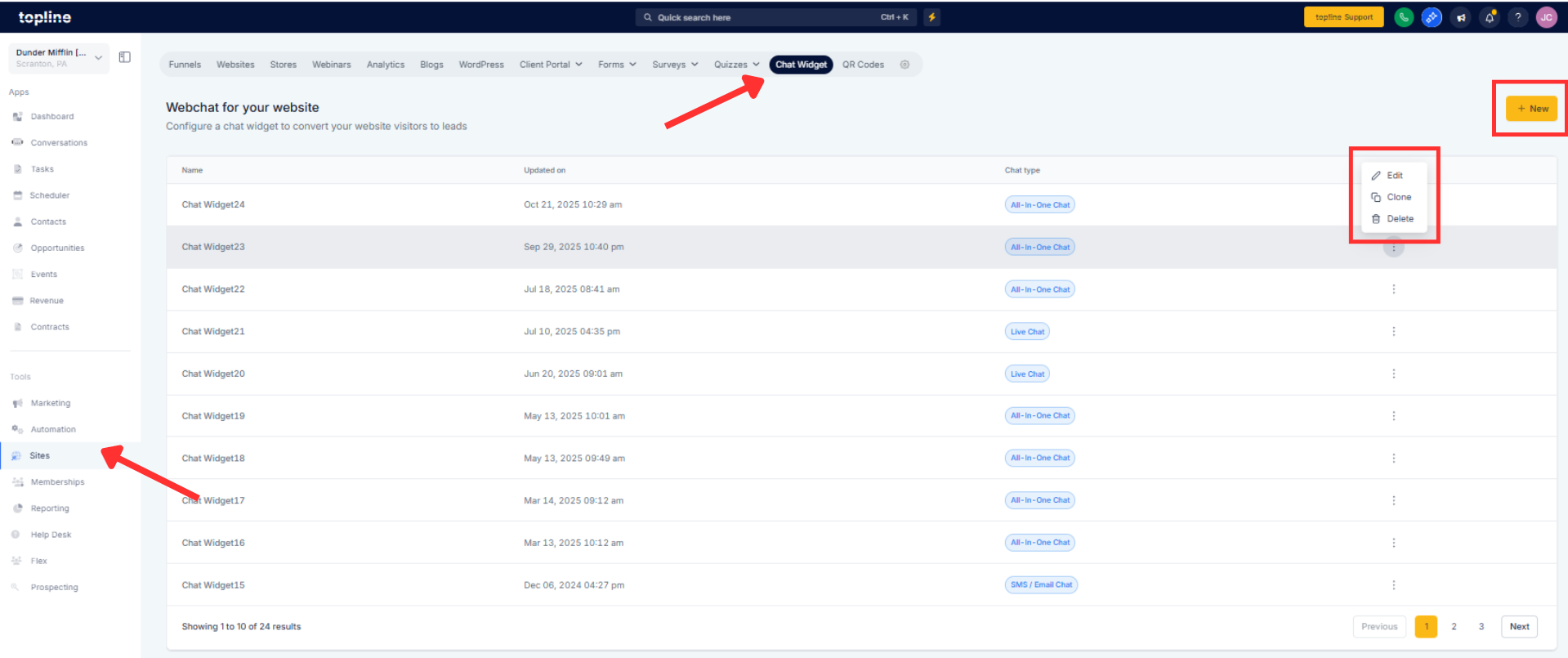

Step 1

Open Sites or Pages and select the page where you want the inline assistant.

Step 2

Add the Voice AI chat widget block and choose Inline placement. Then configure branding, the welcome prompt, the default language, and escalation rules.
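The Step 2 settings are chosen in the page builder, not in code. To make them concrete, here is a hypothetical TypeScript model of those settings with a small pre-publish check. The `InlineVoiceWidgetSettings` shape, `validateSettings`, and the 160-character limit for a concise welcome prompt are illustrative assumptions; only `Intl.getCanonicalLocales`, a standard JavaScript API that throws a `RangeError` for malformed language tags, is real.

```typescript
// Hypothetical model of the Step 2 settings; the real block is configured in the UI.
interface InlineVoiceWidgetSettings {
  placement: "inline";
  brandColor: string;      // hex color, e.g. "#1a73e8"
  welcomePrompt: string;
  defaultLanguage: string; // BCP 47 tag, e.g. "en-US"
  textToSpeech: boolean;
  escalationQueues: string[];
}

// Assumption: an editorial limit for a "concise" prompt, not a product limit.
const MAX_WELCOME_LENGTH = 160;

function validateSettings(s: InlineVoiceWidgetSettings): string[] {
  const problems: string[] = [];

  if (!/^#[0-9a-fA-F]{6}$/.test(s.brandColor)) {
    problems.push("brandColor should be a 6-digit hex color");
  }

  const prompt = s.welcomePrompt.trim();
  if (prompt.length === 0) {
    problems.push("welcomePrompt is empty");
  } else if (prompt.length > MAX_WELCOME_LENGTH) {
    problems.push(`welcomePrompt is longer than ${MAX_WELCOME_LENGTH} characters`);
  }

  try {
    // Throws a RangeError when the tag is not a well-formed language tag.
    Intl.getCanonicalLocales(s.defaultLanguage);
  } catch {
    problems.push(`defaultLanguage "${s.defaultLanguage}" is not a valid language tag`);
  }

  if (s.escalationQueues.length === 0) {
    problems.push("no escalation queue is configured");
  }

  return problems;
}

const draft: InlineVoiceWidgetSettings = {
  placement: "inline",
  brandColor: "#1a73e8",
  welcomePrompt: "Hi! Ask me about pricing or book a demo.",
  defaultLanguage: "en-US",
  textToSpeech: false, // default to text, per the best practices above
  escalationQueues: ["sales"],
};
console.log(validateSettings(draft)); // []
```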

How is the inline voice widget different from a floating chat?

It sits inside your page layout next to relevant content instead of overlaying it, and it still shares full transcripts with Conversations for seamless follow-ups.

What audio permissions are required?

The browser requests microphone access on first use, and playback respects autoplay policies and the device’s volume or silent mode.
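The sketch below illustrates the standard browser behavior this answer describes; it is not the widget's own code. `getUserMedia` rejects with a `NotAllowedError` when microphone access is denied and a `NotFoundError` when no microphone is available, and `HTMLAudioElement.play()` returns a promise that rejects with `NotAllowedError` when an autoplay policy blocks playback. The helper names (`requestMicrophone`, `speakReply`, `classifyMicError`) and the status labels are hypothetical, and the browser calls are passed in so the logic can also run outside a page.

```typescript
// Outcome of asking for the microphone (hypothetical labels).
type MicStatus = "granted" | "denied" | "no-device" | "error";

// Outcome of trying to speak a reply aloud (hypothetical labels).
type PlaybackStatus = "played" | "blocked" | "error";

// Reads the `name` of a DOMException-like error without needing DOM typings.
function errorName(err: unknown): string | undefined {
  if (typeof err === "object" && err !== null && "name" in err) {
    return String((err as { name: unknown }).name);
  }
  return undefined;
}

// getUserMedia rejects with NotAllowedError when access is denied
// and with NotFoundError when no microphone is available.
function classifyMicError(err: unknown): MicStatus {
  switch (errorName(err)) {
    case "NotAllowedError":
      return "denied";
    case "NotFoundError":
      return "no-device";
    default:
      return "error";
  }
}

// The browser call is injected; in a page pass
// (c) => navigator.mediaDevices.getUserMedia(c). The stream is returned so the
// caller can record from it and stop its tracks afterwards.
async function requestMicrophone<S>(
  getMic: (constraints: { audio: boolean }) => Promise<S>,
): Promise<{ status: MicStatus; stream?: S }> {
  try {
    const stream = await getMic({ audio: true });
    return { status: "granted", stream };
  } catch (err) {
    return { status: classifyMicError(err) };
  }
}

// Anything with a promise-returning play(), such as an HTMLAudioElement.
interface Playable {
  play(): Promise<void>;
}

async function speakReply(
  audio: Playable,
  text: string,
  showCaption: (text: string) => void,
): Promise<PlaybackStatus> {
  // Show the caption first so the reply stays readable if audio is blocked or muted.
  showCaption(text);
  try {
    await audio.play();
    return "played";
  } catch (err) {
    // Autoplay policies reject play() with NotAllowedError.
    return errorName(err) === "NotAllowedError" ? "blocked" : "error";
  }
}
```

In a page, `requestMicrophone` would typically be called from the push-to-talk button's click handler, since that click is a user gesture that browsers usually require before starting audio. Note that `navigator.mediaDevices` is only available in secure (HTTPS) contexts. A `"denied"` result is a natural point to fall back to typing.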

Does it support multiple languages?

Yes. It detects supported languages, responds in the detected language, and saves the transcript with the detected locale.
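One common way to "respond accordingly" is a lookup that falls back from the exact locale to the same base language and then to the page's default language. The TypeScript sketch below assumes that strategy; `resolveResponseLocale` and the example locale list are hypothetical, and the widget's actual detection and fallback rules may differ.

```typescript
// Hypothetical helper: choose the locale for replies and the saved transcript.
// Strategy (an assumption): exact match, then same base language, then page default.
function resolveResponseLocale(
  detected: string | undefined,
  supported: readonly string[],
  pageDefault: string,
): string {
  if (!detected) return pageDefault;

  const wanted = detected.toLowerCase();
  const baseOf = (tag: string) => tag.toLowerCase().split("-")[0];

  // 1. Exact match (case-insensitive), e.g. "pt-BR" -> "pt-BR".
  const exact = supported.find((tag) => tag.toLowerCase() === wanted);
  if (exact) return exact;

  // 2. Same base language, e.g. "es-MX" -> "es-ES".
  const sameLanguage = supported.find((tag) => baseOf(tag) === baseOf(wanted));
  if (sameLanguage) return sameLanguage;

  // 3. Unsupported language: use the page's default language.
  return pageDefault;
}

const supported = ["en-US", "es-ES", "pt-BR"];
console.log(resolveResponseLocale("es-MX", supported, "en-US")); // es-ES
console.log(resolveResponseLocale("ja-JP", supported, "en-US")); // en-US
```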

Can visitors switch between voice and typing?

Yes. Visitors can use push-to-talk with live captions or type inline, and both modes are captured in a single transcript thread.
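To picture one thread that holds both input modes, here is a minimal TypeScript sketch that merges voice and typed turns by timestamp and renders them as plain text, the kind of output the transcript's copy and download options could produce. The `TranscriptEntry` shape and the `mergeThread` and `toPlainText` helpers are hypothetical names for illustration.

```typescript
// Hypothetical transcript entry; real transcripts may carry more fields.
interface TranscriptEntry {
  at: number; // epoch milliseconds
  speaker: "visitor" | "assistant" | "agent";
  mode: "voice" | "text";
  text: string;
}

// Combine voice and typed turns into a single thread ordered by time.
// Array.prototype.sort is stable, so entries with equal timestamps keep input order.
function mergeThread(...sources: TranscriptEntry[][]): TranscriptEntry[] {
  return ([] as TranscriptEntry[]).concat(...sources).sort((a, b) => a.at - b.at);
}

// Render the thread as plain text for copying or downloading.
function toPlainText(thread: TranscriptEntry[]): string {
  return thread
    .map((e) => `${new Date(e.at).toISOString()} [${e.speaker}, ${e.mode}] ${e.text}`)
    .join("\n");
}

const voiceTurns: TranscriptEntry[] = [
  { at: 1000, speaker: "visitor", mode: "voice", text: "Any openings on Friday?" },
];
const typedTurns: TranscriptEntry[] = [
  { at: 0, speaker: "visitor", mode: "text", text: "Hi" },
  { at: 2000, speaker: "assistant", mode: "text", text: "Yes, 3 pm is free." },
];

console.log(toPlainText(mergeThread(voiceTurns, typedTurns)));
```

In a browser, the resulting text could be offered as a download with `new Blob([text], { type: "text/plain" })` and `URL.createObjectURL`.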

How are handoffs to human agents handled?

Escalation rules route the conversation into Conversations along with its transcript context, tags, and assignments, so agents can prioritize follow-up.
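Escalation rules can be thought of as phrase matches that select a queue and tags and attach recent turns as context. The TypeScript sketch below assumes case-insensitive substring matching with a numeric priority to choose between overlapping rules; `EscalationRule`, `routeEscalation`, and the queue and tag names are hypothetical and do not describe the product's actual rule engine.

```typescript
// Hypothetical rule shape; the product's escalation settings may differ.
interface EscalationRule {
  phrases: string[]; // matched case-insensitively as substrings
  queue: string;     // live-agent queue to assign
  tags: string[];    // tags attached for priority follow-up
  priority: number;  // higher wins; on a tie, the earlier rule wins
}

interface Handoff {
  queue: string;
  tags: string[];
  matchedPhrase: string;
  context: string[]; // recent turns passed along so the agent has context
}

function routeEscalation(
  utterance: string,
  recentTurns: string[],
  rules: EscalationRule[],
  contextSize = 5,
): Handoff | null {
  const text = utterance.toLowerCase();
  let best: { rule: EscalationRule; phrase: string } | null = null;

  for (const rule of rules) {
    const phrase = rule.phrases.find((p) => text.includes(p.toLowerCase()));
    if (phrase !== undefined && (best === null || rule.priority > best.rule.priority)) {
      best = { rule, phrase };
    }
  }

  if (best === null) return null; // no rule matched; the assistant keeps handling it

  return {
    queue: best.rule.queue,
    tags: [...best.rule.tags],
    matchedPhrase: best.phrase,
    context: recentTurns.slice(-contextSize),
  };
}

const rules: EscalationRule[] = [
  { phrases: ["talk to a person", "human"], queue: "support", tags: ["handoff"], priority: 1 },
  { phrases: ["cancel my order"], queue: "billing", tags: ["priority", "churn-risk"], priority: 5 },
];

const handoff = routeEscalation(
  "I want to cancel my order, can I talk to a human?",
  ["Hi", "Where is my order?", "It is delayed."],
  rules,
  2,
);
console.log(handoff?.queue); // billing
```

Both rules match the example utterance; the higher-priority billing rule wins, which is how a tag such as `priority` can reach the agent queue first.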